This anti-modernism is characterised by opposition to avatars of modernity such as democracy, liberalism and industrialisation. Yet it often shares much of the vocabulary of the discourses it attacks, which makes anti-modernist discourse difficult to identify via simple keyword searches. We therefore need methods that help us navigate a corpus systematically to identify relevant material. This pilot uses the digitised impresso newspaper corpus, which offers a large and varied body of source material. We present a pipeline for applying text mining to historical sources and discuss the relevance of such methods to our research questions. We hope to provide insights into the iterative exploration process, with regard to both methods and corpus, and to show how it can lead to preliminary results.

In this post we begin by discussing how we used topic modelling to gain a better understanding of the content of our corpus. Topic modelling is a statistical approach that identifies topics within a collection of documents based on patterns of word co-occurrence within the documents. We show how this technique helped us find a relevant subcorpus for our investigation, an important challenge when working with large digitised historical datasets and with questions expressed in polysemic vocabulary. We then explain how we combined these results with close reading to select relevant articles from the corpus and create a relevant subset. In a subsequent blog post we will explain how this subcorpus was then used to train a naïve Bayes classifier that helped us find even more relevant articles. Finally, we reflect on how our interaction with the sources led us to revise and reframe our research questions, since it involved not only a direct analysis of individual articles but also a process of text mining designed to enhance our understanding and definition of the concepts involved.

1. Research question and corpus presentation: anti-modernism and Europe in the Swiss and Luxembourgish press before 1945

In the first half of the 20th century, Switzerland found itself at the crossroads of several issues faced by European societies: economic industrialisation and internationalisation brought substantial wealth to the country but also gave rise to deep-seated social changes. Switzerland’s integration into the global community was not only economic. During the interwar period, Switzerland, and especially Geneva, hosted several international diplomatic institutions, the most prominent being the League of Nations (1920–1946). The city also became the stage for international non-governmental organisations, such as the European Congress of Nationalities, composed of representatives of minorities in the European states newly drawn after the Treaty of Versailles. By 1940, Switzerland was surrounded by fascist regimes, with the exception of Liechtenstein. Since Swiss society was closely connected to Italy, Germany and France, the political climate in these countries influenced the Swiss public sphere, resulting in the emergence of a Swiss extreme-right culture, with the “helvétiste” movement and even fascist organisations. At the same time, the founding principle of neutrality and the strong pacifist tradition in Switzerland created some distance from the various international and European political changes.

Discussions on Europe therefore developed at several levels in Switzerland, concerning both the initiatives for building an institutional Europe and the role Switzerland should play in them. Several intellectuals took part in the media debates on the topic, coming from fields as diverse as mathematics, with Sophie Piccard (1904–1990), and literature, with Gonzague de Reynold (1880–1970), each of whom defended a particular vision of Europe. Since de Reynold was a prominent anti-modernist, the question we want to ask in this context is: did anti-modernists also use Europe as a platform to propagate their world views? Were there particular efforts by anti-modernists to reappropriate the discussion on Europe and to reframe the association of Europe with peace, progressivism and the Enlightenment in terms of their own world view?

Looking at the press gives us a broader perspective on discussions about Europe in previous eras. Above all, given the limited space the press offers for expressing ideas and the wide appeal of the medium, it is an interesting source for studying how ideas took shape in the public sphere.

Our study therefore involved two stages: first, identifying specific discourses on Europe, and second, studying the presence of these discourses in the press: where (in which titles and which sections of the newspaper) and when, depending on the available metadata and annotations. To begin with, we needed to extract a subcorpus from the entire collection of digitised newspapers that would enable us to identify relevant authors. The challenge was that both Europe and anti-modernism are difficult to capture via keywords: as Antohi and Trencsényi explain, “Anti-modernism could be defined as (a) the negative double of modernism and (b) the critique of modernism within modernism, not outside of or separated from it.”1 We therefore drew on the six figures of anti-modernism that Antoine Compagnon defined in his authoritative study of the phenomenon in France.2 We started our pilot with the Second World War period and major newspapers from Geneva and neighbouring Lausanne, to identify anti-modern discourses on Europe in the press.

2. Subcorpus selection: a first dive into the mass of sources

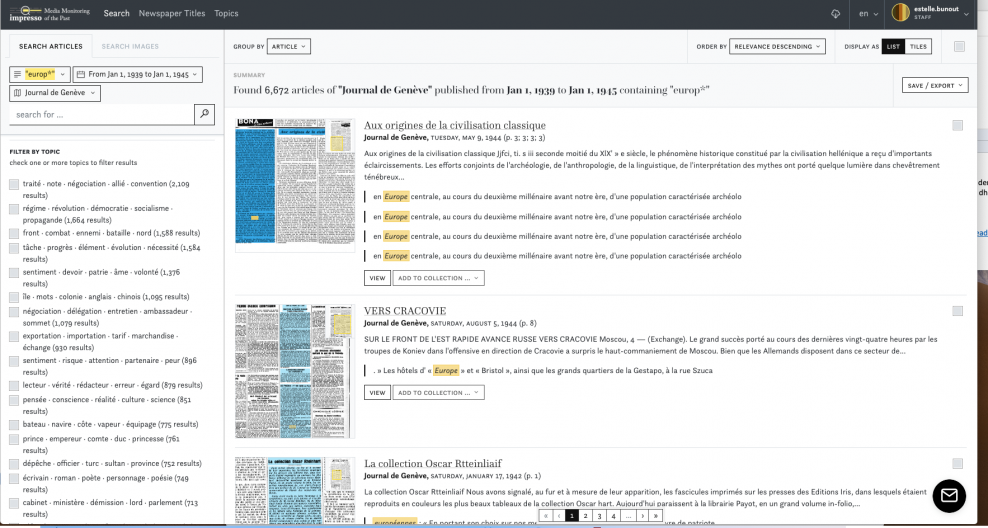

From the digitised historical newspapers in the impresso corpus, we first needed to select a subset of articles. We used two French-language newspapers: the Gazette de Lausanne and the Journal de Genève.3 Because of availability and size constraints, we focused, as a pilot study, on these two titles and a limited period (the Second World War), to test the efficacy of the pipeline. To identify articles dealing with Europe, we started with a selection of articles containing the string “europ*”, so as to include both the noun “Europe” and the various French forms of the adjective “European” (européen, européenne, européens, européennes).

- 1. Antohi and Trencsényi, “Introduction. Approaching Anti-Modernism”, 3.

- 2. Compagnon, Les Antimodernes. The six anti-modernist figures: counter-revolution as a historical figure, anti-Enlightenment as a philosophical figure, pessimism as a moral figure, the obsession with original sin as a religious figure, the sublime as an aesthetic figure, and ranting and vituperating as a style figure.

- 3. See the entries in the Swiss historical dictionary, available in French, German and Italian: Bollinger, “Journal de Genève”; Bollinger, “Gazette de Lausanne”.

This resulted in a subset of more than 12,000 articles from these two newspapers for the period 1939–1945, each containing at least one occurrence of the string “europ*”. As these terms are not monosemic, the corpus created by the query remains heterogeneous, with mentions of Europe in the context of sports, diplomatic events, culture and even radio programmes or advertising. The aim of this first step was to make use of the advantages offered by the digitisation of historical newspapers: not to limit the research corpus directly (for instance by focusing only on editorials), but rather to harvest the full breadth of the collection, limited only by technical weaknesses such as OCR errors.1
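The “europ*” query can be approximated with a case-insensitive regular expression. The sketch below is a hypothetical illustration run on plain article texts held in a Python dictionary (the article IDs and sentences are invented); it is not the query syntax of the impresso interface. The word boundary `\b` keeps words such as “neuropathie” from matching, while the match on a European hockey championship shows why the resulting subset stays heterogeneous.

```python
import re

# Case-insensitive prefix match for "europ*": Europe, européen, Européennes, ...
# \w is Unicode-aware for str patterns, so accented endings are matched too.
EUROP_PATTERN = re.compile(r"\beurop\w*", re.IGNORECASE)


def matches_europ(text: str) -> bool:
    """Return True if the text contains at least one word starting with 'europ'."""
    return EUROP_PATTERN.search(text) is not None


def select_articles(articles: dict[str, str]) -> list[str]:
    """Return the IDs of the articles whose text matches the 'europ*' query."""
    return [art_id for art_id, text in articles.items() if matches_europ(text)]


# Invented sample articles (hypothetical IDs).
articles = {
    "JDG-1940-01": "La situation de l'Europe centrale reste tendue.",
    "GDL-1941-07": "Le championnat d'Europe de hockey est annulé.",
    "GDL-1942-03": "Les prix du lait augmentent à Lausanne.",
    "JDG-1943-11": "Une conception européenne de la civilisation.",
}
print(select_articles(articles))
# ['JDG-1940-01', 'GDL-1941-07', 'JDG-1943-11']
```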

Faced with this relatively large and diverse collection of articles, we needed some way to obtain an overview of the structure of its content. Manually labelling individual articles or extracting their authors or themes was not feasible in a short amount of time. How could we navigate this first subcorpus? How could we select a relevant subset of newspaper articles expressing anti-modernist positions on the topic of Europe? As we explain in the following paragraphs, we used topic modelling as a first, auxiliary step to explore our corpus and get to know it better.

3. Topic modelling – browsing with a kaleidoscope?

In broad terms, topic modelling is a technique that shows what topics a collection of documents may be about, expressed by a list of words.2 More precisely, according to Wikipedia, topic modelling is “(…) a type of statistical model for discovering the abstract ‘topics’ that occur in a collection of documents”. In other words, topic modelling can be seen as a method for finding groups of words in large collections of documents that represent the different aspects of the information in the collection. Topic modelling aims to reduce the complexity of large corpora of texts by representing every document as a combination of word clusters, or topics.3
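In the LDA model used later in this post (Blei, Ng and Jordan), this “combination of topics” can be written as a mixture. In the notation below, which we chose for illustration, each document $d$ has topic proportions $\theta_d$ over $K$ topics, and each topic $k$ is a probability distribution $\varphi_k$ over the vocabulary $V$, so the probability of observing word $w$ in document $d$ is:

$$
p(w \mid d) \;=\; \sum_{k=1}^{K} \theta_{d,k}\,\varphi_{k,w},
\qquad \sum_{k=1}^{K} \theta_{d,k} = 1,
\qquad \sum_{w \in V} \varphi_{k,w} = 1 .
$$

Because the same word $w$ can carry probability in several topics $\varphi_k$, it may contribute to different topics depending on the document in which it appears.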

Topic modelling fits the basic epistemological requirement of our pilot project: it adopts the hypothesis that topics are expressed with similar words, i.e. that topics cut across texts and are not specific to, for example, one particular author. Words themselves can also be indicative of several topics, depending on their context.4 Topic modelling can therefore be used as a proxy to study public discourses, since it is in line with the hypothesis that historical actors may share similar vocabulary to express similar topics at any given time.

Topic modelling has become increasingly popular in the humanities, history and social sciences.5 One significant advantage of topic modelling is that, while it reduces complexity, it is still based on the corpus itself and informs us about the use of words and the combinations of words that are the most frequent. It is data-driven, i.e. it relies on the documents themselves and does not depend on predefined thematic categories. It thus helps to provide an overview of a collection of documents without the risk of anachronistic typologies. The lists of words describing the topics provide direct insight into the collection, although they may convey a misleading sense of transparency: reading the words connected by topic modelling may lead to false interpretations. They have to be used carefully and in connection with the underlying documents, and not taken at face value, as we will see later on.

All in all, this method has great potential for a research theme such as “the question of Europe in the press”, which is elusive because of its polysemy. With topic modelling, we aim to “index” individual articles according to the association of the word Europe with other (generic and polysemic) words and to see whether this combination gives us some indication of discourses on Europe. In other words, topic modelling is used here to explore the corpus and identify subcollections or clusters of semantically related articles.

Beyond the revolutionary mystique

In his recently published book Enumerations, Andrew Piper points to the revolutionary mystique that surrounds the application of topic models in digital humanities and history research. Piper states that topic modelling is not “doing something digital” per se. The basic intuition behind topic modelling reflects a much older tradition of deriving coherent categories of meaning from a surplus of (textual) information, such as indexes, dictionaries, manual annotations and encyclopaedias. In the past, this surplus was often seen from the perspective of a single individual: what can one person read? Today, the availability and use of large digital or digitised datasets account for the computational implementation of topic modelling that we now use. It is nonetheless important to be aware that topic modelling has a distinct, pre-computational history. In that sense, topic modelling is just another approach to indexing human knowledge, in the same vein as dictionaries, for example.6

There are some important differences, however. Our traditional human approach to categorising “topics” takes place within a rather closed or predefined system: these topics are a product of human interpretation and categorisation, framed by an ontology. Computational topics, by contrast, are not predefined, which makes them more open and less clear-cut. They consist of different words with different weights, and topic models have no built-in understanding of human knowledge or language. The topic in a topic model is therefore not a fixed or clear-cut entity present in documents, but a more complex, layered and diverse semantic field or space, which researchers are free to interpret as they see fit. Within each document lies the possibility of every single topic; each topic therefore contains the very seeds of every other topic, and topic modelling explicitly models the potential polysemy of words.7 This is a particularly interesting feature for corpus exploration in historical research.

A critical side note on topic modelling

It must be pointed out that topic modelling has some major drawbacks. The probabilistic nature of topic modelling (using the LDA algorithm,8 as we did) means that the output is subject to chance variation. It can be compared to rolling dice: the output may differ every time you run the algorithm and generate a model. Even with the same corpus and the same parameter settings, the set of topics generated by one LDA model is never exactly the same as that of another trained model. Topic modelling is also very sensitive to parameterisation: tweaking the parameter settings (e.g. the number of topics) in the training phase, even slightly, can lead to a different set of topics. This explains why topic modelling has been compared to a kaleidoscope, and why we must be cautious about relying (only) on topic modelling outputs for analytical purposes.9
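To make the role of chance concrete, here is a toy collapsed Gibbs sampler for LDA in plain Python. It is our own illustrative sketch, not MALLET's optimised implementation, and the hyperparameters `alpha` and `beta` as well as the four mini-documents are arbitrary assumptions. Every token starts with a random topic and is repeatedly resampled, so the result depends on the random seed: fixing the seed repeats a run exactly, while another seed can yield different topics.

```python
import random


def train_lda(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=None):
    """Toy collapsed Gibbs sampler for LDA (illustration only, not MALLET).

    docs: list of token lists. Returns (doc_topic, topic_word), where
    doc_topic[d][k] is the share of topic k in document d and
    topic_word[k][w] is the probability of word w in topic k.
    """
    rng = random.Random(seed)  # seed=None: seeded from OS randomness or the clock
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    n_dk = [[0] * K for _ in docs]      # tokens per (document, topic)
    n_kw = [[0] * V for _ in range(K)]  # tokens per (topic, word)
    n_k = [0] * K                       # tokens per topic
    z = []                              # current topic of every token

    # Random initial assignment: the first source of run-to-run variation.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][index[w]] += 1
            n_k[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = index[w], z[d][i]
                # Take the token out of the counts ...
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # ... and resample its topic from the full conditional distribution.
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                    for t in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1

    doc_topic = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
                 for d, doc in enumerate(docs)]
    topic_word = [{w: (n_kw[k][index[w]] + beta) / (n_k[k] + V * beta) for w in vocab}
                  for k in range(K)]
    return doc_topic, topic_word


docs = [
    "guerre paix europe nation".split(),
    "neige froid vent pluie".split(),
    "europe civilisation christianisme esprit".split(),
    "match championnat europe hockey".split(),
]
a, _ = train_lda(docs, num_topics=3, iterations=50, seed=1)
b, _ = train_lda(docs, num_topics=3, iterations=50, seed=1)
c, _ = train_lda(docs, num_topics=3, iterations=50, seed=2)
print(a == b)  # True: same seed, identical run
print(a == c)  # may be False: another seed can produce other topics
```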

In general, training the model with a higher number of topics breaks these topics down into smaller ones containing (fragments of) specific language use, or focusing on particular people, organisations or subthemes. As the overall number of topics increases, so does the number of topics covering each (increasingly narrow) subject in the corpus. In a model with ten topics, for example, a single Mediterranean/Southern European topic might appear, containing words on both Spain and Italy; with the number of topics set to 50, words related to Spain and Italy might appear in separate topics. With a higher number of topics, the topics thus capture more aspects of the same subject, or more ways of speaking or writing about it.10

Although quantitative or statistical measures have been developed to calculate the optimal number of topics,11 we took a heuristic approach suited to the demands of our pilot and chose parameters that, judging by the output, proved sufficient. More generally, for analytical applications, we consider it problematic that LDA topic modelling seems to be a highly non-reproducible approach.12 For exploratory purposes, however, topic modelling proved helpful in creating a relevant subcorpus for our project, both by finding relevant material and by excluding less relevant articles.

4. Output of topic modelling: the topics

Training models

We trained a topic model on the 12,382 newspaper articles using MALLET (LDA) and eventually set the number of topics to 100.13 We defined a list of stop words, extended after our first round of topic modelling, which helped us identify some very frequent OCR mistakes and stop words that were interfering with the creation of readable topics. These stop words were removed from the corpus before training the topic model.
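A minimal preprocessing sketch, assuming a tokenizer that lowercases the text and keeps elided forms such as “l'europe” as single tokens (as they appear in the topic lists below). Both word lists are hypothetical placeholders, not the project's actual stop-word list; `OCR_NOISE` stands for the kind of fragments that a first round of topic modelling can reveal.

```python
import re

# Hypothetical French function words (a real list would be much longer).
STOP_WORDS = {"le", "la", "les", "de", "des", "du", "et", "un", "une", "en", "qui", "que"}
# Hypothetical OCR fragments spotted after a first topic-modelling run.
OCR_NOISE = {"apr", "tre"}

# Letters only (no digits or underscores), allowing internal apostrophes: "l'europe".
TOKEN_RE = re.compile(r"[^\W\d_]+(?:['’][^\W\d_]+)*")


def preprocess(text: str, stop_words: set[str]) -> list[str]:
    """Lowercase, tokenize, and drop stop words and one-letter tokens."""
    tokens = TOKEN_RE.findall(text.lower())
    return [t for t in tokens if len(t) > 1 and t not in stop_words]


text = "La guerre et la paix de l'Europe apr la conf rence"
print(preprocess(text, STOP_WORDS | OCR_NOISE))
# ['guerre', 'paix', "l'europe", 'conf', 'rence']
```

Fragments such as “conf” and “rence” survive this filter, which is why inspecting the first topics is useful for extending the list.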

Reading and labelling the topics

After several rounds of experimenting with parameters, we reached a balance point where the topics seemed both understandable and made up of meaningful word clusters. Topic modelling provided us with 100 different “topics”, each consisting of a word list that characterises it. From these 100 topics, we selected 21 topics/word lists that seemed relevant for our investigation. We read the list of the top 20 words for each topic and labelled each topic based on the words that best characterised it. As the goal was to collect relevant articles via the topics that seemed to contain them, this labelling was a first selection step. It involved summarising the word lists with regard to the research question: for instance, a word list containing “cold, wind, snow…” was labelled “weather”. With this labelling process, we were already able to exclude a series of topics (and the underlying articles) that seemed to have little relevance for our research. These topics helped reduce the number of articles to be read or inspected, since articles that scored high on them did not have to be read.
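Reading the top words and excluding topics by label can be sketched as follows. The word weights are invented toy numbers, and the labels for topics 14 and 58 are hypothetical stand-ins for the manual labelling described above; only the idea of sorting a topic's words by weight and filtering topics by label is illustrated.

```python
def top_words(topic_word: dict[str, float], n: int = 20) -> list[str]:
    """Return the n highest-weighted words of a topic (ties broken alphabetically)."""
    ranked = sorted(topic_word.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, _ in ranked[:n]]


# Toy weights for one topic (invented numbers, for illustration only).
topic_72 = {"temp": 0.09, "froid": 0.08, "vent": 0.07, "neige": 0.06,
            "soleil": 0.05, "europe": 0.001}
print(top_words(topic_72, n=4))  # ['temp', 'froid', 'vent', 'neige']

# Hand-assigned labels after reading each topic's top words (hypothetical subset).
labels = {6: "sports", 14: "war and peace", 58: "civilisation and religion",
          72: "weather", 99: "sports"}
NON_RELEVANT = {"weather", "sports"}
kept = sorted(k for k, label in labels.items() if label not in NON_RELEVANT)
print(kept)  # [14, 58]
```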

The topics we felt confident excluding from closer inspection were those very likely to contain no indication of views on the issue of Europe, in other words those in which the “europ*” character string was used in a very neutral way.

Some examples of these non-relevant topics:

Weather-related:14

| topic 72 |
| --- |
| temp froid vent neige rature soleil pluie degr l'h relative chasse thermom gel l'air jours nocturne barom humidit diurne cembre |

Sports:

| topic 06 |
| --- |
| points suisse zurich bat course championnats allemagne poids cat sultats champion classement record min sec dimanche tour championnat pts d'europe |

| topic 99 |
| --- |
| quipe suisse match joueurs jeu quipes championnat but stade partie ligue dimanche buts premi coupe tournoi football rencontre contre hockey |

Some topics were hard to label because they consist of a combination of words that gives little indication of what the underlying articles have in common, or because they contain many misrecognised words:

| topic 41 |
| --- |
| lune pat messager robin virginia hopkins jagger gentleman manuscrit daniel lim woolf collections mulcaster cataclysme boiteux corridor major pittard cranley |

| topic 96 |
| --- |
| probl int diff questions donn rents particulier sultats ressant vue rapport domaine nombreuses rentes appr exp tude ensuite veloppement souvent |

We were interested in a more political, philosophical or literary discourse on Europe, potentially revealing the underlying conceptions of Europe in its genealogical, mythological or historical dimensions. We therefore looked for topics containing words, and especially combinations of words, that might express such conceptions. Here are some examples of the topics classified as relevant for our question:

| topic 14 |
| --- |
| guerre l'allemagne contre nation paix peuples qu'elle arm lutte france l'angleterre int hitler temps peuple but cas sistance edm d'autres |

| topic 58 |
| --- |
| chr monde civilisation l'europe dieu l'homme pens l'histoire foi tienne religion l'esprit christianisme humaine entre morale moderne hommes tien non |

Next, we looked for newspaper articles containing several occurrences of the words in these topics. Instead of close reading all 12,000 articles, we only checked those that scored high on these relevant topics. In practice, topic modelling produces two main outputs: a list of topics and their distribution across the collection. In other words, each article receives an estimated share (a percentage) for each topic. We compiled the articles and their topic distributions in a document and used these distributions to select articles with a high percentage of a given topic for close reading. Projecting topics onto this vast collection of articles creates a reading grid which, even though it is subject to change because of its probabilistic nature, can be helpful for navigating a large collection. The topics and their distribution create smaller subcollections of articles, “titbits” of the corpus, that can be read.
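A sketch of how the per-article topic distribution can be turned into a reading list for one topic. It assumes the document-topic table is tab-separated with one row per article: a row index, the article name, then one proportion per topic in topic order (the dense layout we understand recent MALLET versions write with `--output-doc-topics`; older versions use a different layout, so the parser should be checked against the actual file). Article names and values are invented.

```python
def parse_doc_topics(lines):
    """Map article name -> list of topic proportions (assumed dense layout)."""
    doc_topics = {}
    for line in lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comment headers
        fields = line.rstrip("\n").split("\t")
        doc_topics[fields[1]] = [float(p) for p in fields[2:]]
    return doc_topics


def reading_order(doc_topics, topic, min_share=0.0):
    """Articles sorted by their share of one topic, highest first."""
    ranked = sorted(doc_topics.items(), key=lambda item: item[1][topic], reverse=True)
    return [(name, shares[topic]) for name, shares in ranked if shares[topic] >= min_share]


# Invented rows: index, article name, proportions for topics 0, 1 and 2.
sample = [
    "0\tJDG-1940-01\t0.10\t0.70\t0.20\n",
    "1\tGDL-1941-07\t0.80\t0.05\t0.15\n",
    "2\tJDG-1943-11\t0.05\t0.45\t0.50\n",
]
doc_topics = parse_doc_topics(sample)
print(reading_order(doc_topics, topic=1, min_share=0.3))
# [('JDG-1940-01', 0.7), ('JDG-1943-11', 0.45)]
```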

Selecting topics and articles

The articles attached to each topic can be selected topic by topic. Each topic gives rise to a cluster of articles, linked by the frequent occurrence of words belonging to the same topic. At this stage, we ran the risk, mentioned above, of overestimating the homogeneity and semantic coherence of the article clusters from a human reader’s perspective. A first dive into the clusters gave us some indications of their homogeneity: do the articles deal with a similar topic or person? Are they articles of the same type? For instance, clusters of articles about book publications may cover the various themes addressed by the books, but their presentation or specific stylistic vocabulary creates some overlap that explains why these articles are clustered together. To assess this homogeneity, however, one has to look at the underlying articles that belong to a selected topic.

We can provide another example: topics 11 and 78 seem to cover very similar content, at least in terms of their top 20 words. It turned out, however, that the first consisted of a cluster of articles that were mainly editorials about Europe written by a conservative author (Gonzague de Reynold), whereas the second consisted exclusively of articles about speeches by Winston Churchill and Franklin D. Roosevelt on the need for alliances between the European peoples and their unity against Nazi Germany.

The large amount of overlap between the top words in these topics indicates that we have chosen a number of topics that is rather high for the size of our corpus. This is illustrative of the drawbacks that we mentioned earlier, but does not decrease the usability of the topic model for our exploration. When we used the statistically calculated optimal number of topics, we discovered that the categories of most interest for us disappeared as topics from the model. With a smaller number of topics, the topics were more semantically homogeneous (the words seem to belong to one semantic field) but were also more generic, and lacked the sub-themes that are interesting for our corpus building.
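Word overlap and article overlap can be measured separately, for instance with the Jaccard index (size of the intersection divided by size of the union). The word lists and article IDs below are invented toy values, not the actual content of topics 11 and 78; they only illustrate how two topics can share most of their top words while sharing few articles.

```python
def jaccard(a, b):
    """Jaccard index of two collections: |A & B| / |A | B| (0.0 if both are empty)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Toy data: top words and high-scoring articles of two hypothetical topics.
words_a = ["europe", "guerre", "paix", "peuples", "nations"]
words_b = ["europe", "guerre", "paix", "peuples", "alliés"]
articles_a = {"a1", "a2", "a3"}
articles_b = {"a3", "a4", "a5"}

print(round(jaccard(words_a, words_b), 2))        # 0.67
print(round(jaccard(articles_a, articles_b), 2))  # 0.2
```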

Other topics created clusters around unexpected themes: the following topic collected articles reporting on ceremonies in which Europe was mentioned in the context of a national celebration, in countries such as Portugal, France and Switzerland.

| topic 81 |
| --- |
| roi anniversaire foule monie comm nationale drapeau manifestations pendance manifestation souverain patrie fid prince place fil l'occasion l'anniversaire lit discours |

At the same time, topics that seemed very coherent and understandable actually collected very heterogeneous content:

| topic 84 |
| --- |
| travail guerre tre probl pays seront uvre ouvriers service mesures plus plan reconstruction apr che production l'industrie gouvernement travaux leurs |

Outcome

To sum up:

- We started with 100 “trained” topics based on our sample dataset of more than 12,000 newspaper articles.

- A few dozen of these topics were directly excluded from the set based on expert annotation/labelling. These topic word lists contained words with seemingly low relevance for our research questions.

- This resulted in 46 potentially relevant topics, corresponding to 8,251 articles. We used these topics for closer inspection.

- Closer inspection consisted of quickly scanning the newspaper articles that scored relatively high on these topics (articles in which the topic accounted for at least 30% of the words, according to the model’s topic distribution).

- Based on this closer inspection we selected 15 of the 46 topics as being relevant.

- The 2,424 newspaper articles that scored high on the occurrence of words from these 15 topics are potentially relevant for our research question.

- The articles were read closely, and after this manual inspection the final selection was made.
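The two filtering rounds above can be sketched as set operations on the document-topic distribution. This sketch assumes a mapping from article name to topic proportions and reads the 30% criterion as a topic proportion of at least 0.3; the article names, proportions and topic sets are invented, and the function name `select_by_topics` is hypothetical.

```python
def select_by_topics(doc_topics, topics, threshold=0.3):
    """Articles in which at least one of the given topics reaches the threshold."""
    return {
        name
        for name, shares in doc_topics.items()
        if any(shares[k] >= threshold for k in topics)
    }


# Toy distribution over four topics for five articles (invented values).
doc_topics = {
    "art-1": [0.60, 0.10, 0.20, 0.10],
    "art-2": [0.05, 0.35, 0.50, 0.10],
    "art-3": [0.10, 0.10, 0.10, 0.70],
    "art-4": [0.25, 0.25, 0.25, 0.25],
    "art-5": [0.02, 0.08, 0.80, 0.10],
}
first_pass = {1, 2}  # topics kept after labelling (hypothetical)
second_pass = {1}    # topics kept after skimming their articles (hypothetical)

step1 = select_by_topics(doc_topics, first_pass)
step2 = select_by_topics({n: doc_topics[n] for n in step1}, second_pass)
print(sorted(step1))  # ['art-2', 'art-5']
print(sorted(step2))  # ['art-2']
```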

In other words, the topics functioned as filters to narrow down the number of articles that had to be inspected manually, while also creating an interesting reading order or context for those articles: from an initial list of 12,637 articles, the topic selection first reduced the number to 8,251 and then to 2,424. This last set was the collection of documents that required close reading.

The article clusters created by topic modelling set a context for close reading that differs from the way one would read a list of articles resulting from, for instance, a keyword search in the digitised newspapers. When reading articles linked by a topic, one looks both for relevance to the research question and for commonalities between the articles. For instance, the collection of articles on the commemoration of national holidays in Portugal, Switzerland and France turns these commemorations into a theme. More obviously, a cluster containing articles of the same type (e.g. editorials or book reviews) by the same author may point to salient stylistic elements, but it can also draw attention to other authors whose articles have been added to the same cluster. Reshaping a collection with topic modelling thus helps to raise new questions by creating new reading contexts.

This reading context also turned out to be of heuristic value for the analysis of the collection. Several topics labelled as covering Swiss political issues were read together. For instance, topics 11 and 78 were both selected because their word lists looked promising: they received the same label and were inspected closely together. After this inspection, one topic (T11) was kept because it contained editorials by a Swiss conservative author who became famous in the 1930s for a book on Europe, and the other was eliminated because it contained reports on Allied speeches concerning post-war plans.

The risk with this method is losing track of what parts of the whole corpus have been read and what parts have not. This is why bringing all the topic distributions together and using them as a filter to read one segment of the corpus after another seems crucial. This is not always possible, however, depending on the size of the corpus. Also, to maintain an overview of the article clusters and how they relate to each other, some visualisation techniques may be necessary for exploratory purposes. It appeared that even with very similar topics (having several overlapping words), the overlap in articles in which these words occurred was sometimes limited.

| | Number of articles | % of total query | % of topic-model selection (first step) |
| --- | --- | --- | --- |
| Query (whole collection) | 12,637 | 100% | |
| Total articles from the topic selection | 8,251 | 65% | 100% |
| Total articles for the close reading selection | 2,424 | 19% | 29% |
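The percentages in the table follow directly from the article counts; a quick check in Python, using the counts from the table:

```python
query, topic_selection, close_reading = 12637, 8251, 2424

# Shares rounded to whole percentages, as in the table.
print(f"{topic_selection / query:.0%}")          # 65%
print(f"{close_reading / query:.0%}")            # 19%
print(f"{close_reading / topic_selection:.0%}")  # 29%
```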

- 1. Chiron et al., “Impact of OCR Errors on the Use of Digital Libraries”.

- 2. Da, “The Computational Case against Computational Literary Studies”, 625.

- 3. Jacobs and Tschötschel, “Topic Models Meet Discourse Analysis”, 3.

- 4. Blei, Ng and Jordan, “Latent Dirichlet Allocation”.

- 5. Graham, Weingart and Milligan, “Getting Started with Topic Modeling and MALLET”; Weingart, “Topic Modeling for Humanists”; Weingart, “Topic Modeling in the Humanities”.

- 6. Piper, Enumerations, 68, 73.

- 7. Piper, 74; Jacobs and Tschötschel, “Topic Models Meet Discourse Analysis”, 3–4.

- 8. Blei, Ng and Jordan, “Latent Dirichlet Allocation”.

- 9. Da, “The Computational Case against Computational Literary Studies”, 625, 629.

- 10. Jacobs and Tschötschel, “Topic Models Meet Discourse Analysis”, 6.

- 11. Arun et al., “On Finding the Natural Number of Topics with Latent Dirichlet Allocation”; Jacobs and Tschötschel, “Topic Models Meet Discourse Analysis”, 5.

- 12. In the future, we want to experiment with non-negative matrix factorisation. This dimension reduction algorithm can be used to find topics among documents in a large text corpus without the probabilistic approach used in LDA topic modelling.

- 13. Graham, Weingart and Milligan, “Getting Started with Topic Modeling and MALLET”.

- 14. A side note on the advantages of topic modelling: it is not hindered by OCR mistakes, which also appear in the topics’ word lists. For instance, “cembre” is probably a frequent fragment of “décembre”, and “barom” of “baromètre”. This informs us about frequent OCR mistakes and shows that topic modelling does not rely on dictionaries or lexicons but on the raw text.

5. Conclusion: Topic modelling as a (nibbling) tool to navigate through large historical digitised source collections

From this iterative exploration, we learned that a high number of topics can produce very homogeneous clusters of articles whose commonalities would be difficult to detect through close reading alone. The topics sometimes create surprising clusters that can fuel the research process by revealing co-occurrences of terms that may not initially have been considered, such as the “ceremonies” topic shown above. This method is therefore a way of structuring the reading of the source collection, facilitating the selection process and providing another means of approaching historical sources. On the one hand, this can be very fruitful and insightful. On the other, it is still a time-consuming enterprise, at least initially. Topics that seemed very clear and coherent based on their top word lists turned out to represent incoherent clusters of articles, which shows that top word lists can be somewhat misleading. Topics must be connected with the underlying articles before reaching any conclusions; the apparent coherence of certain topics cannot be taken at face value. Finally, topic modelling does not guarantee a detailed and comprehensive exploration of a corpus. This requires corpus expansion tools, as we will discuss in our second blog post.

Another way to identify relevant clusters could be to harvest the metadata of the articles: if one could compare topics with, for instance, named entities or other labels given to the articles, such as “advertisement” or “feuilleton”, produced by the document layout analysis and visual segment analysis of the collection, this could provide an extra layer of information for identifying relevant articles and excluding potentially less relevant ones. However, this relies on other sources of information that are not always available and cannot always be produced automatically. Other ways to improve the efficiency of topic modelling may involve changing parameters, such as using bigrams when training the topics. This can make it easier to identify people when their first and last names are captured as a bigram, or to capture more specific expressions, for instance a noun together with its adjective.
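A simple way to add bigrams before training is to merge frequent pairs of adjacent tokens into single tokens, so that a name such as “Gonzague de Reynold” (once the stop word “de” has been removed) becomes one feature. The sketch below uses a plain frequency threshold (`min_count`), which is our own assumption rather than the method of any particular toolkit; the toy documents are invented.

```python
from collections import Counter


def frequent_bigrams(docs, min_count=2):
    """Adjacent token pairs that occur at least min_count times in the corpus."""
    counts = Counter(pair for doc in docs for pair in zip(doc, doc[1:]))
    return {pair for pair, count in counts.items() if count >= min_count}


def merge_bigrams(doc, bigrams):
    """Replace each listed pair by a single 'first_second' token, left to right."""
    merged, i = [], 0
    while i < len(doc):
        if i + 1 < len(doc) and (doc[i], doc[i + 1]) in bigrams:
            merged.append(f"{doc[i]}_{doc[i + 1]}")
            i += 2
        else:
            merged.append(doc[i])
            i += 1
    return merged


# Toy documents after stop-word removal (invented).
docs = [
    ["gonzague", "reynold", "europe", "chrétienne"],
    ["conférence", "gonzague", "reynold", "genève"],
    ["civilisation", "europe", "chrétienne"],
]
pairs = frequent_bigrams(docs)
print([merge_bigrams(doc, pairs) for doc in docs])
# [['gonzague_reynold', 'europe_chrétienne'], ['conférence', 'gonzague_reynold', 'genève'], ['civilisation', 'europe_chrétienne']]
```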

To conclude, we believe that the outcomes of this exploration show that topic modelling has the potential to be a useful and powerful tool for selecting historical sources from large collections of digitised historical data. Although it is data-driven, probabilistic and not fully transparent or reproducible, it leaves the researcher with many possibilities for interacting with the output, both the topics and their presence in each document (distribution). The first exploratory iterations provided us with useful suggestions for further study that we believe we would not otherwise have found. However, we would stress that these suggestions should be viewed in the light of sufficient expert historical knowledge and used with caution. In the words of computer scientist Duncan Buell: “(...) at the end one comes back to analysis by scholars to determine if there’s really anything there.”1

Bibliography

Antohi, Sorin, and Balázs Trencsényi. “Introduction. Approaching Anti-Modernism”. In Anti-Modernism: Radical Revisions of Collective Identity, edited by Diana Mishkova, Marius Turda and Balázs Trencsényi, 1–43. Discourses of Collective Identity in Central and Southeast Europe 1770–1945. Budapest: Central European University Press, 2018. http://books.openedition.org/ceup/2969.

Arun, R., V. Suresh, C. E. Veni Madhavan and M. N. Narasimha Murthy. “On Finding the Natural Number of Topics with Latent Dirichlet Allocation: Some Observations”. In Advances in Knowledge Discovery and Data Mining, edited by Mohammed J. Zaki, Jeffrey Xu Yu, B. Ravindran and Vikram Pudi, 6118:391–402. Berlin, Heidelberg: Springer Berlin Heidelberg, 2010. https://doi.org/10.1007/978-3-642-13657-3_43.

Blei, David M., Andrew Y. Ng and Michael I. Jordan. “Latent Dirichlet Allocation”. Journal of Machine Learning Research 3 (January 2003): 993–1022.

Bollinger, Ernst. “Gazette de Lausanne”. hls-dhs-dss.ch, 15 August 2005. https://hls-dhs-dss.ch/articles/024789/2005-08-15/.

———. “Journal de Genève”. hls-dhs-dss.ch, 2 September 2008. https://hls-dhs-dss.ch/articles/024799/2008-09-02/.

Buell, Duncan. “More Responses to ‘The Computational Case against Computational Literary Studies’”. In the Moment (blog), 12 April 2019. https://critinq.wordpress.com/2019/04/12/more-responses-to-the-computational-case-against-computational-literary-studies/.

Chiron, G., A. Doucet, M. Coustaty, M. Visani and J. Moreux. “Impact of OCR Errors on the Use of Digital Libraries: Towards a Better Access to Information”. In 2017 ACM/IEEE Joint Conference on Digital Libraries (JCDL), 1–4, 2017. https://doi.org/10.1109/JCDL.2017.7991582.

Compagnon, Antoine. Les Antimodernes: De Joseph de Maistre à Roland Barthes. Paris: Folio, 2016.

Da, Nan Z. “The Computational Case against Computational Literary Studies”. Critical Inquiry 45, no. 3 (1 March 2019): 601–39. https://doi.org/10.1086/702594.

Graham, Shawn, Scott Weingart and Ian Milligan. “Getting Started with Topic Modeling and MALLET”. The Programming Historian, no. 1 (2012). https://programminghistorian.org/en/lessons/topic-modeling-and-mallet.

Jacobs, Thomas and Robin Tschötschel. “Topic Models Meet Discourse Analysis: A Quantitative Tool for a Qualitative Approach”. International Journal of Social Research Methodology 0, no. 0 (7 February 2019): 1–17. https://doi.org/10.1080/13645579.2019.1576317.

Piper, Andrew. Enumerations: Data and Literary Study. Chicago: University of Chicago Press, 2018.

Weingart, Scott. “Topic Modeling for Humanists: A Guided Tour”. The Scottbot Irregular (blog), 25 July 2012. http://scottbot.net/topic-modeling-for-humanists-a-guided-tour/.

———. “Topic Modeling in the Humanities”. The Scottbot Irregular (blog), 11 April 2013. http://scottbot.net/topic-modeling-in-the-humanities/.

- 1. Buell, “More Responses to ‘The Computational Case against Computational Literary Studies’”.